2.2. Interactive, Collaborative Robots, by Danica Kragic.

Danica Kragic (Royal Institute of Technology, Stockholm, Sweden) talked about "Interactive, Collaborative Robots: Challenges and Opportunities".

Despite recent progress, robots are still largely preprogrammed for their tasks, and most commercial robot applications still have a very limited ability to interact and physically engage with humans.

In other words, something as simple as cutting bread is still challenging for robots.

And there are still no robots that can wash the dishes.

Even a good grasp (quickly taking something in your hand and holding it firmly) is far from simple if you are a robot...

Most robots today still have only two fingers (grippers) to work with.

Many robots generate motion strategies without any sensory feedback (for simple manipulation tasks), which obviously only gets them so far.

Still, adding visual and haptic feedback will probably allow robots to better understand object shapes, scene properties and in-hand manipulation.

But it is of course still not easy for robots to understand how pushing an object will change the scene in cluttered environments.

Learning and collaboration might come easily to humans, but robots are obviously not quite there yet, even though robots that are better at integrating motor and sensory channels might eventually give us much smarter machines... Still, there is a lot to learn...

But, certainly, a great talk about recent progress.

2.3. AI and Software Engineering.

2.3.1. Jian Li et al., Chinese University of Hong Kong.

Talked about "Code Completion with Neural Attention and Pointer Networks".

Today, software tools such as IDEs provide a set of helpful services to accelerate software development. Intelligent code completion is one of the most useful of these features: it suggests probable next code tokens, such as method calls or object fields, based on the existing code in the context. Traditionally, code completion relies heavily on compile-time type information to predict the next tokens.

But there has also been some success for dynamically typed languages, where researchers have treated these languages as natural languages and trained code completion systems on large codebases (e.g. from GitHub). In particular, neural language models such as Recurrent Neural Networks (RNNs) can capture sequential distributions and deep semantics.

Here the authors propose their own system, built on improvements to existing techniques, which turns out to be quite effective.

Cool!

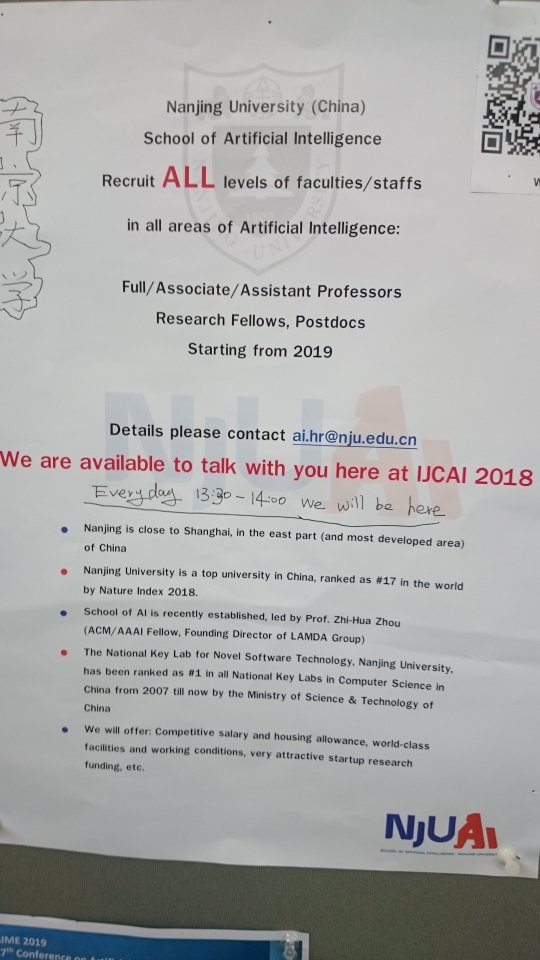
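To make the "treat code as a language" idea concrete, here is a minimal sketch of next-token suggestion. It is not the authors' model: instead of a neural network with attention and pointers, it uses a plain bigram count model, and the tiny tokenized corpus and the function names (`train_bigram_model`, `suggest_next`) are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(token_sequences):
    """Count, for every token, which tokens follow it in the corpus."""
    followers = defaultdict(Counter)
    for tokens in token_sequences:
        for current, nxt in zip(tokens, tokens[1:]):
            followers[current][nxt] += 1
    return followers


def suggest_next(followers, previous_token, k=3):
    """Return up to k of the most frequent successors of previous_token."""
    counts = followers.get(previous_token, Counter())
    return [token for token, _ in counts.most_common(k)]


# Hypothetical, already tokenized training corpus (an assumption, not real data).
corpus = [
    ["for", "i", "in", "range", "(", "n", ")", ":"],
    ["for", "x", "in", "items", ":"],
    ["for", "i", "in", "range", "(", "len", "(", "xs", ")", ")", ":"],
]

model = train_bigram_model(corpus)
suggestions = suggest_next(model, "in")
print(suggestions)  # ['range', 'items']
```

A bigram model only looks one token back, which is exactly the limitation that richer sequence models such as RNNs are meant to address.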

2.3.2. Hui-Hui Wei et al., Novel Software Technology, Nanjing University.

Talked about "Positive and Unlabeled Learning for Detecting Software Functional Clones with Adversarial Training".

"Clone detection" is a vital component of today's software development, since it helps to avoid having copies of the same code in several places in one's program. Detecting pieces of code that have similar functionality but look (slightly) different is not so easy, though. Such clones are usually created by copying, pasting or modifying existing code.

Here, the authors suggest that the clone detection task can be formalized as a Positive-Unlabeled problem (learning from a set of known positive examples plus unlabeled data), and indicate that they had some success with it.

Again: Interesting!
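For readers new to Positive-Unlabeled learning, here is a small sketch of the general setting. It does not reproduce the authors' approach (no adversarial training, no learned code representations). It assumes each code pair has already been reduced to a single hypothetical similarity score in [0, 1], and it uses the classic Elkan and Noto correction, which in turn assumes that the labeled clones are a random sample of all clones. All data values are invented.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, labels, lr=0.5, steps=5000):
    """Fit g(x) = sigmoid(w*x + b) by gradient descent on the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, s in zip(xs, labels):
            err = sigmoid(w * x + b) - s
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical similarity scores of code pairs (assumption, not real data).
labeled_clones = [0.90, 0.85, 0.95, 0.80]                       # known clones
unlabeled = [0.88, 0.92, 0.10, 0.20, 0.15, 0.30, 0.25, 0.05]    # unknown status

# Step 1: learn to separate "labeled" (s=1) from "unlabeled" (s=0).
xs = labeled_clones + unlabeled
s = [1] * len(labeled_clones) + [0] * len(unlabeled)
w, b = fit_logistic(xs, s)

# Step 2: estimate c = P(labeled | clone) as the mean score on known clones.
# (Simplification: a held-out set of positives would normally be used here.)
c = sum(sigmoid(w * x + b) for x in labeled_clones) / len(labeled_clones)


# Step 3: rescale the scores to approximate P(clone | x), capped at 1.
def clone_probability(x):
    return min(1.0, sigmoid(w * x + b) / c)


likely_clones = [x for x in unlabeled if clone_probability(x) > 0.5]
print(likely_clones)
```

The point of the rescaling step is that "unlabeled" does not mean "negative": unlabeled pairs that score like the known clones are pulled back up and flagged as likely clones.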

2.3.3. Krzysztof Krawiec et al., Poznan University of Technology, Poland.

Talked about "Counterexample-Driven Genetic Programming: Stochastic Synthesis of Provably Correct Programs".

Or: can we find a program that matches a given set of input-output examples?

Here it is suggested that genetic programming is an effective technique for inductive synthesis of programs from tests, i.e. training examples of desired input-output behavior. But programs synthesized in this way are not guaranteed to generalize beyond the training set...

The authors then describe an improvement over such a (too) "simplistic" genetic programming approach: an improvement that synthesizes correct programs fast, using few examples.

In the authors' words: "This work may pave the way for effective hybridization of heuristic search methods like GP with spec-based synthesis."

For more about the context for this work, see also the SyGuS Competition.
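The counterexample-driven idea named in the talk title can be sketched as a loop: propose a candidate, check it against a specification, and if it fails, keep the failing input as a new test. The sketch below is a toy under loud assumptions. It swaps genetic programming for plain enumeration over a hand-written candidate list, and it swaps a formal verifier for brute-force checking on a small integer range, so it proves nothing beyond that range. The task (the maximum of two integers) and all names are chosen for illustration only.

```python
from itertools import product

# Hypothetical candidate programs over two integers (stand-in for a GP population).
CANDIDATES = [
    ("x", lambda x, y: x),
    ("y", lambda x, y: y),
    ("x + y", lambda x, y: x + y),
    ("x if x > y else y", lambda x, y: x if x > y else y),
]


def spec(x, y, out):
    """Specification of max: out bounds both inputs and equals one of them."""
    return out >= x and out >= y and (out == x or out == y)


def verify(program, domain=range(-3, 4)):
    """Return a counterexample input, or None if the spec holds on the domain."""
    for x, y in product(domain, repeat=2):
        if not spec(x, y, program(x, y)):
            return (x, y)
    return None


def synthesize():
    tests = []  # counterexamples collected so far act as the test set
    for name, program in CANDIDATES:
        # Cheap filter: discard candidates that already fail a known test.
        if any(not spec(x, y, program(x, y)) for x, y in tests):
            continue
        counterexample = verify(program)
        if counterexample is None:
            return name, tests
        tests.append(counterexample)
    return None, tests


solution, counterexamples = synthesize()
print(solution)         # x if x > y else y
print(counterexamples)  # [(-3, -2), (-2, -3)]
```

In this toy run, two counterexamples are enough to reject the wrong candidates, although the checked domain holds 49 input pairs. That gives a small flavor of the "few examples" point above.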